Classification with Python

In this notebook we practice all the classification algorithms that we have learned in this course.

We load a dataset using the pandas library, apply the following algorithms, and find the best one for this specific dataset using accuracy evaluation methods.

Let’s first load the required libraries:

```python
import itertools
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.ticker import NullFormatter
import pandas as pd
import matplotlib.ticker as ticker
from sklearn import preprocessing
%matplotlib inline
```

About dataset

This dataset is about past loans. The Loan_train.csv data set includes details of 346 customers whose loans are already paid off or defaulted. It includes the following fields:

| Field | Description |
|---|---|
| Loan_status | Whether a loan is paid off or in collection |
| Principal | Basic principal loan amount at the |
| Terms | Origination terms, which can be a weekly (7 days), biweekly, or monthly payoff schedule |
| Effective_date | When the loan was originated and took effect |
| Due_date | Since it’s a one-time payoff schedule, each loan has one single due date |
| Age | Age of applicant |
| Education | Education of applicant |
| Gender | The gender of applicant |

Let’s download the dataset

!wget -O loan_train.csv https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMDeveloperSkillsNetwork-ML0101EN-SkillsNetwork/labs/FinalModule_Coursera/data/loan_train.csv

--2023-01-26 13:26:05-- https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMDeveloperSkillsNetwork-ML0101EN-SkillsNetwork/labs/FinalModule_Coursera/data/loan_train.csv

Resolving cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud (cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud)... 169.63.118.104

Connecting to cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud (cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud)|169.63.118.104|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 23101 (23K) [text/csv]

Saving to: ‘loan_train.csv’

loan_train.csv 100%[===================>] 22,56K --.-KB/s in 0,001s

2023-01-26 13:26:06 (28,9 MB/s) - ‘loan_train.csv’ saved [23101/23101]

Load Data From CSV File

df = pd.read_csv('loan_train.csv')

df.head()

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | PAIDOFF | 1000 | 30 | 9/8/2016 | 10/7/2016 | 45 | High School or Below | male |
| 1 | 2 | 2 | PAIDOFF | 1000 | 30 | 9/8/2016 | 10/7/2016 | 33 | Bechalor | female |
| 2 | 3 | 3 | PAIDOFF | 1000 | 15 | 9/8/2016 | 9/22/2016 | 27 | college | male |
| 3 | 4 | 4 | PAIDOFF | 1000 | 30 | 9/9/2016 | 10/8/2016 | 28 | college | female |
| 4 | 6 | 6 | PAIDOFF | 1000 | 30 | 9/9/2016 | 10/8/2016 | 29 | college | male |

df.shape

(346, 10)

Convert to datetime objects

df['due_date'] = pd.to_datetime(df['due_date'])

df['effective_date'] = pd.to_datetime(df['effective_date'])

df.head()

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 45 | High School or Below | male |
| 1 | 2 | 2 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 33 | Bechalor | female |
| 2 | 3 | 3 | PAIDOFF | 1000 | 15 | 2016-09-08 | 2016-09-22 | 27 | college | male |
| 3 | 4 | 4 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 28 | college | female |
| 4 | 6 | 6 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 29 | college | male |
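The conversion above lets pandas infer the date layout. A minimal sketch, assuming the raw dates are always month/day/year as in the rows shown before conversion (e.g. `9/8/2016`), passes an explicit `format` so the parse is deterministic and fails loudly on an unexpected string:

```python
import pandas as pd

# Raw date strings in the month/day/year layout seen in loan_train.csv
raw = pd.Series(["9/8/2016", "10/7/2016", "9/22/2016"])

# An explicit format removes any month/day ambiguity and raises on malformed input
dates = pd.to_datetime(raw, format="%m/%d/%Y")

print(dates.dt.strftime("%Y-%m-%d").tolist())  # ['2016-09-08', '2016-10-07', '2016-09-22']
```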

Data visualization and pre-processing

Let’s see how many of each class are in our dataset:

df['loan_status'].value_counts()

PAIDOFF 260

COLLECTION 86

Name: loan_status, dtype: int64

260 people have paid off the loan on time, while 86 have gone into collection.

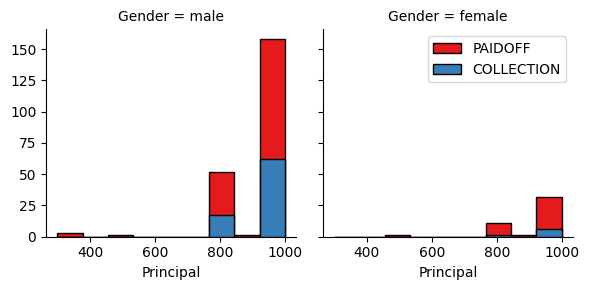
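Because the classes are imbalanced, a model that always predicts `PAIDOFF` would already be right about 75% of the time; that majority-class baseline is a useful reference point for the accuracies reported later. A minimal sketch that rebuilds the counts above:

```python
import pandas as pd

# Label column with the class counts reported above (260 PAIDOFF, 86 COLLECTION)
labels = pd.Series(["PAIDOFF"] * 260 + ["COLLECTION"] * 86)

shares = labels.value_counts(normalize=True)
baseline_accuracy = shares.max()  # accuracy of always predicting the majority class

print(shares.idxmax(), round(baseline_accuracy, 4))  # PAIDOFF 0.7514
```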

Let’s plot some columns to understand the data better:

```python
# Notice: installing seaborn might take a few minutes
!conda install -c anaconda seaborn -y
```

zsh:1: command not found: conda

import seaborn as sns

bins = np.linspace(df.Principal.min(), df.Principal.max(), 10)

g = sns.FacetGrid(df, col="Gender", hue="loan_status", palette="Set1", col_wrap=2)

g.map(plt.hist, 'Principal', bins=bins, ec="k")

g.axes[-1].legend()

plt.show()

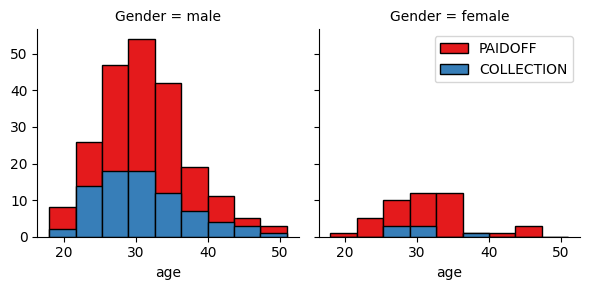

bins = np.linspace(df.age.min(), df.age.max(), 10)

g = sns.FacetGrid(df, col="Gender", hue="loan_status", palette="Set1", col_wrap=2)

g.map(plt.hist, 'age', bins=bins, ec="k")

g.axes[-1].legend()

plt.show()

Pre-processing: Feature selection/extraction

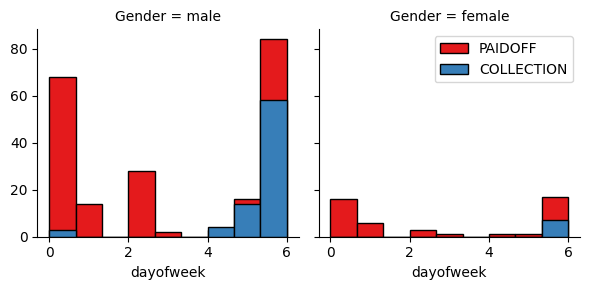

Let’s look at the day of the week on which people get the loan:

df['dayofweek'] = df['effective_date'].dt.dayofweek

bins = np.linspace(df.dayofweek.min(), df.dayofweek.max(), 10)

g = sns.FacetGrid(df, col="Gender", hue="loan_status", palette="Set1", col_wrap=2)

g.map(plt.hist, 'dayofweek', bins=bins, ec="k")

g.axes[-1].legend()

plt.show()

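We see that people who get the loan at the end of the week are less likely to pay it off, so let’s use feature binarization with a threshold: loans whose `dayofweek` is greater than 3 are flagged as weekend loans. Pandas numbers Monday as 0, so days 4 to 6 are Friday to Sunday.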

df['weekend'] = df['dayofweek'].apply(lambda x: 1 if (x>3) else 0)

df.head()

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender | dayofweek | weekend |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 45 | High School or Below | male | 3 | 0 |
| 1 | 2 | 2 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 33 | Bechalor | female | 3 | 0 |
| 2 | 3 | 3 | PAIDOFF | 1000 | 15 | 2016-09-08 | 2016-09-22 | 27 | college | male | 3 | 0 |
| 3 | 4 | 4 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 28 | college | female | 4 | 1 |
| 4 | 6 | 6 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 29 | college | male | 4 | 1 |
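The `apply` with a lambda above works row by row. An equivalent vectorized sketch (an alternative, not the notebook's own code) compares the whole column at once:

```python
import pandas as pd

# A Thursday, a Friday and a Sunday from September 2016
effective = pd.to_datetime(pd.Series(["2016-09-08", "2016-09-09", "2016-09-11"]))

dayofweek = effective.dt.dayofweek     # Monday=0 ... Sunday=6
weekend = (dayofweek > 3).astype(int)  # 1 for Friday-Sunday, else 0

print(dayofweek.tolist(), weekend.tolist())  # [3, 4, 6] [0, 1, 1]
```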

Convert Categorical features to numerical values

Let’s look at gender:

df.groupby(['Gender'])['loan_status'].value_counts(normalize=True)

Gender loan_status

female PAIDOFF 0.865385

COLLECTION 0.134615

male PAIDOFF 0.731293

COLLECTION 0.268707

Name: loan_status, dtype: float64

86% of women pay off their loans, while only 73% of men do.
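`pd.crosstab` with `normalize="index"` gives the same per-group shares as the `groupby`/`value_counts` call above, laid out as a table with one row per gender. A minimal sketch on a hypothetical toy frame (not the loan data):

```python
import pandas as pd

# Hypothetical toy data: 4 women (1 in collection) and 4 men (2 in collection)
toy = pd.DataFrame({
    "Gender": ["female"] * 4 + ["male"] * 4,
    "loan_status": ["PAIDOFF", "PAIDOFF", "PAIDOFF", "COLLECTION",
                    "PAIDOFF", "PAIDOFF", "COLLECTION", "COLLECTION"],
})

# Each row sums to 1: the share of each loan status within that gender
shares = pd.crosstab(toy["Gender"], toy["loan_status"], normalize="index")
print(shares)
```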

Let’s convert male to 0 and female to 1:

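```python
# Assign the result back: an inplace replace on df['Gender'] is chained
# assignment and may not update df in newer pandas versions
df['Gender'] = df['Gender'].map({'male': 0, 'female': 1})
df.head()
```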

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender | dayofweek | weekend |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 45 | High School or Below | 0 | 3 | 0 |
| 1 | 2 | 2 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 33 | Bechalor | 1 | 3 | 0 |
| 2 | 3 | 3 | PAIDOFF | 1000 | 15 | 2016-09-08 | 2016-09-22 | 27 | college | 0 | 3 | 0 |
| 3 | 4 | 4 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 28 | college | 1 | 4 | 1 |
| 4 | 6 | 6 | PAIDOFF | 1000 | 30 | 2016-09-09 | 2016-10-08 | 29 | college | 0 | 4 | 1 |

One Hot Encoding

How about education?

df.groupby(['education'])['loan_status'].value_counts(normalize=True)

education loan_status

Bechalor PAIDOFF 0.750000

COLLECTION 0.250000

High School or Below PAIDOFF 0.741722

COLLECTION 0.258278

Master or Above COLLECTION 0.500000

PAIDOFF 0.500000

college PAIDOFF 0.765101

COLLECTION 0.234899

Name: loan_status, dtype: float64

Features before One Hot Encoding

df[['Principal','terms','age','Gender','education']].head()

| | Principal | terms | age | Gender | education |
|---|---|---|---|---|---|
| 0 | 1000 | 30 | 45 | 0 | High School or Below |
| 1 | 1000 | 30 | 33 | 1 | Bechalor |
| 2 | 1000 | 15 | 27 | 0 | college |
| 3 | 1000 | 30 | 28 | 1 | college |
| 4 | 1000 | 30 | 29 | 0 | college |

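Use the one-hot encoding technique to convert categorical variables to binary variables and append them to the feature DataFrame. The `Master or Above` column is then dropped, so that category is represented by zeros in all three remaining education columns: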

Feature = df[['Principal','terms','age','Gender','weekend']]

Feature = pd.concat([Feature,pd.get_dummies(df['education'])], axis=1)

Feature.drop(['Master or Above'], axis = 1,inplace=True)

Feature.head()

| | Principal | terms | age | Gender | weekend | Bechalor | High School or Below | college |
|---|---|---|---|---|---|---|---|---|
| 0 | 1000 | 30 | 45 | 0 | 0 | 0 | 1 | 0 |
| 1 | 1000 | 30 | 33 | 1 | 0 | 1 | 0 | 0 |
| 2 | 1000 | 15 | 27 | 0 | 0 | 0 | 0 | 1 |
| 3 | 1000 | 30 | 28 | 1 | 1 | 0 | 0 | 1 |
| 4 | 1000 | 30 | 29 | 0 | 1 | 0 | 0 | 1 |
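For reference, a minimal sketch of the same encoding on a few education values. It assumes pandas 2.x, where `get_dummies` returns booleans by default, so `dtype=int` is passed to get the 0/1 columns shown above:

```python
import pandas as pd

education = pd.Series(["High School or Below", "Bechalor", "college", "Master or Above"])

# One binary column per category; categories are sorted, so capitalized names come first
dummies = pd.get_dummies(education, dtype=int)

# Drop one category: a row of all zeros now means "Master or Above"
dummies = dummies.drop(columns=["Master or Above"])
print(dummies)
```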

Feature Selection

Let’s define the feature set, X:

X = Feature

X[0:5]

| | Principal | terms | age | Gender | weekend | Bechalor | High School or Below | college |
|---|---|---|---|---|---|---|---|---|
| 0 | 1000 | 30 | 45 | 0 | 0 | 0 | 1 | 0 |
| 1 | 1000 | 30 | 33 | 1 | 0 | 1 | 0 | 0 |
| 2 | 1000 | 15 | 27 | 0 | 0 | 0 | 0 | 1 |
| 3 | 1000 | 30 | 28 | 1 | 1 | 0 | 0 | 1 |
| 4 | 1000 | 30 | 29 | 0 | 1 | 0 | 0 | 1 |

What are our labels?

y = df['loan_status'].values

y[0:5]

array(['PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF'],

dtype=object)

Normalize Data

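Data standardization gives the data zero mean and unit variance. (Technically, the scaler should be fit after the train/test split, on the training rows only, so that statistics from the test rows do not leak into training.)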

X= preprocessing.StandardScaler().fit(X).transform(X)

X[0:5]

array([[ 0.51578458, 0.92071769, 2.33152555, -0.42056004, -1.20577805,

-0.38170062, 1.13639374, -0.86968108],

[ 0.51578458, 0.92071769, 0.34170148, 2.37778177, -1.20577805,

2.61985426, -0.87997669, -0.86968108],

[ 0.51578458, -0.95911111, -0.65321055, -0.42056004, -1.20577805,

-0.38170062, -0.87997669, 1.14984679],

[ 0.51578458, 0.92071769, -0.48739188, 2.37778177, 0.82934003,

-0.38170062, -0.87997669, 1.14984679],

[ 0.51578458, 0.92071769, -0.3215732 , -0.42056004, 0.82934003,

-0.38170062, -0.87997669, 1.14984679]])
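Standardization maps each feature value $x$ to $z = (x - \mu)/\sigma$, where $\mu$ and $\sigma$ are that feature's mean and population standard deviation. The sketch below, on hypothetical toy arrays, checks that formula against `StandardScaler` and shows the train-only variant the note above recommends: the scaler is fit on the training rows, and the same statistics are reused for the test rows.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy feature matrices (rows = customers, columns = features)
X_train = np.array([[1000.0, 30.0], [800.0, 15.0], [300.0, 7.0], [1000.0, 30.0]])
X_test = np.array([[500.0, 15.0]])

scaler = StandardScaler().fit(X_train)  # learn mean and std from training rows only

# Same result by hand: StandardScaler uses the population std (ddof=0)
mu = X_train.mean(axis=0)
sigma = X_train.std(axis=0)
manual = (X_train - mu) / sigma

train_scaled = scaler.transform(X_train)
test_scaled = scaler.transform(X_test)  # reuses the training mean and std
```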

Classification

Now it is your turn: use the training set to build an accurate model, then use the test set to report the model’s accuracy. You should use the following algorithms:

- K Nearest Neighbor (KNN)
- Decision Tree
- Support Vector Machine
- Logistic Regression

__Notice:__

- You can go back to the sections above and change the pre-processing, feature selection, feature extraction, and so on, to make a better model.
- You should use the scikit-learn, SciPy, or NumPy libraries to develop the classification algorithms.
- You should include the code of the algorithm in the following cells.

K Nearest Neighbor (KNN)

Notice: You should find the best k to build the model with the best accuracy.

Warning: you should not use loan_test.csv to find the best k. However, you can split loan_train.csv into train and test sets to find the best k.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, random_state=4)

print ('Train set:', X_train.shape, y_train.shape)

print ('Test set:', X_test.shape, y_test.shape)

Train set: (276, 8) (276,)

Test set: (70, 8) (70,)
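With about 75% of loans paid off, a plain random split can leave the two parts with slightly different class ratios. A sketch of one option, passing `stratify=y` to `train_test_split` on hypothetical data with the same 260/86 label counts (the features here are random numbers, not the loan features):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in: same label counts as loan_train.csv, random features
rng = np.random.default_rng(0)
y = np.array(["PAIDOFF"] * 260 + ["COLLECTION"] * 86)
X = rng.normal(size=(346, 8))

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=4, stratify=y
)

# The share of PAIDOFF in each part stays close to 260/346
print(X_train.shape, X_test.shape)
print((y_train == "PAIDOFF").mean(), (y_test == "PAIDOFF").mean())
```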

from sklearn.neighbors import KNeighborsClassifier

from sklearn import metrics

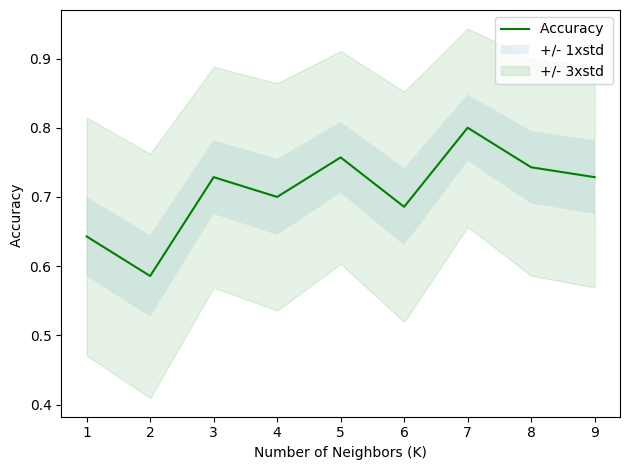

```python
Ks = 10
mean_acc = np.zeros((Ks-1))
std_acc = np.zeros((Ks-1))

for n in range(1, Ks):
    # Train model and predict
    neigh = KNeighborsClassifier(n_neighbors=n).fit(X_train, y_train)
    yhat = neigh.predict(X_test)
    mean_acc[n-1] = metrics.accuracy_score(y_test, yhat)
    # Standard error of the accuracy over the test rows
    std_acc[n-1] = np.std(yhat == y_test) / np.sqrt(yhat.shape[0])

mean_acc
```

array([0.64285714, 0.58571429, 0.72857143, 0.7 , 0.75714286,

0.68571429, 0.8 , 0.74285714, 0.72857143])

plt.plot(range(1,Ks),mean_acc,'g')

plt.fill_between(range(1,Ks),mean_acc - 1 * std_acc,mean_acc + 1 * std_acc, alpha=0.10)

plt.fill_between(range(1,Ks),mean_acc - 3 * std_acc,mean_acc + 3 * std_acc, alpha=0.10,color="green")

plt.legend(('Accuracy ', '+/- 1xstd','+/- 3xstd'))

plt.ylabel('Accuracy ')

plt.xlabel('Number of Neighbors (K)')

plt.tight_layout()

plt.show()

The highest test accuracy (0.80) is reached at k = 7, so we use k = 7.
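The best k above comes from a single 70-row split, so it can shift with a different `random_state`. One common alternative, sketched here on hypothetical synthetic data from `make_classification` rather than the loan features, averages accuracy over 5 cross-validation folds for each k:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical stand-in for the standardized loan features and labels
X, y = make_classification(n_samples=346, n_features=8, weights=[0.75], random_state=4)

ks = range(1, 10)
cv_means = []
for k in ks:
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y, cv=5, scoring="accuracy")
    cv_means.append(scores.mean())  # mean accuracy over the 5 folds

best_k = ks[int(np.argmax(cv_means))]
print("best k:", best_k, "mean CV accuracy:", round(max(cv_means), 3))
```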

```python
k = 7
# Train model and predict
KNN = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
yhat = KNN.predict(X_test)
yhat[0:5]
```

array(['PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF'],

dtype=object)

print("Train set Accuracy: ", metrics.accuracy_score(y_train, KNN.predict(X_train)))

print("Test set Accuracy: ", metrics.accuracy_score(y_test, yhat))

Train set Accuracy: 0.8007246376811594

Test set Accuracy: 0.8

Decision Tree

```python
from sklearn.tree import DecisionTreeClassifier
import sklearn.tree as tree

dTree = DecisionTreeClassifier(criterion="entropy", max_depth=4)
# Fit on the training split so that the test split stays unseen
dTree.fit(X_train, y_train)
predTree = dTree.predict(X_test)
print("Decision Tree's Accuracy: ", metrics.accuracy_score(y_test, predTree))
```
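With `criterion="entropy"`, the tree prefers splits that most reduce the Shannon entropy $H = -\sum_c p_c \log_2 p_c$ of the class proportions in each node. A small hypothetical helper, for illustration only, computes it for the full training set of 260 `PAIDOFF` and 86 `COLLECTION` loans:

```python
import math

def entropy(counts):
    """Shannon entropy (in bits) of a node with the given class counts."""
    total = sum(counts)
    h = 0.0
    for c in counts:
        if c > 0:  # 0 * log(0) is taken as 0
            p = c / total
            h -= p * math.log2(p)
    return h

print(round(entropy([260, 86]), 3))  # 0.809
```

A pure node has entropy 0 and a 50/50 node has entropy 1, so the root of this dataset is already fairly skewed toward `PAIDOFF`.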

Support Vector Machine

from sklearn import svm

clf = svm.SVC(kernel='rbf')

clf.fit(X_train, y_train)


SVC()

yhat = clf.predict(X_test)

yhat [0:5]

array(['COLLECTION', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF'],

dtype=object)
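The `rbf` kernel used here measures similarity as $K(x, x') = \exp(-\gamma \lVert x - x' \rVert^2)$. With the default `gamma='scale'`, scikit-learn sets $\gamma = 1 / (n_\text{features} \cdot \operatorname{Var}(X))$. A minimal sketch on hypothetical toy points checks the formula against `sklearn.metrics.pairwise.rbf_kernel`:

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

# Hypothetical toy points in 2 dimensions
X = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]])

# gamma as computed by SVC(gamma='scale'): 1 / (n_features * variance of all entries)
gamma = 1.0 / (X.shape[1] * X.var())

# Pairwise squared Euclidean distances, then the Gaussian (RBF) kernel
sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
K_manual = np.exp(-gamma * sq_dist)

K_sklearn = rbf_kernel(X, gamma=gamma)
print(np.round(K_manual, 3))
```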

Logistic Regression

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

LR = LogisticRegression(C=0.01, solver='liblinear').fit(X_train,y_train)

yhat = LR.predict(X_test)

yhat_prob = LR.predict_proba(X_test)
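For a binary problem, `predict_proba` returns the sigmoid of the linear score, $P(y = \texttt{classes\_}[1] \mid x) = 1/(1 + e^{-(w \cdot x + b)})$. `classes_` is sorted, so for this data column 1 is `PAIDOFF`. `C=0.01` is the inverse of the regularization strength, so a small value means strong regularization. The sketch below, on hypothetical synthetic data rather than the loan features, checks the sigmoid against `predict_proba`:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Hypothetical synthetic data standing in for the loan features
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

LR = LogisticRegression(C=0.01, solver="liblinear").fit(X, y)

# Linear score w.x + b, then the sigmoid gives P(class classes_[1])
scores = X @ LR.coef_.ravel() + LR.intercept_[0]
p_manual = 1.0 / (1.0 + np.exp(-scores))

p_sklearn = LR.predict_proba(X)[:, 1]
```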

Model Evaluation using Test set

from sklearn.metrics import jaccard_score

from sklearn.metrics import f1_score

from sklearn.metrics import log_loss
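As a reminder of what these metrics compute, here is a hand-worked check on hypothetical labels, with `PAIDOFF` as the positive class: Jaccard is $TP/(TP+FP+FN)$, F1 is $2TP/(2TP+FP+FN)$, `average='weighted'` averages the per-class F1 weighted by each class's support, and log loss is the mean of $-\log p$ for the probability assigned to the true class.

```python
import numpy as np
from sklearn.metrics import f1_score, jaccard_score, log_loss

# Hypothetical true labels and predictions (positive class = PAIDOFF)
y_true = np.array(["PAIDOFF", "PAIDOFF", "COLLECTION", "PAIDOFF", "COLLECTION"])
y_pred = np.array(["PAIDOFF", "COLLECTION", "COLLECTION", "PAIDOFF", "PAIDOFF"])

def counts(pos):
    """True positives, false positives and false negatives for one class."""
    tp = np.sum((y_pred == pos) & (y_true == pos))
    fp = np.sum((y_pred == pos) & (y_true != pos))
    fn = np.sum((y_pred != pos) & (y_true == pos))
    return tp, fp, fn

tp, fp, fn = counts("PAIDOFF")
jaccard_manual = tp / (tp + fp + fn)   # 2 / 4 = 0.5
f1_paid = 2 * tp / (2 * tp + fp + fn)  # 4 / 6

tp_c, fp_c, fn_c = counts("COLLECTION")
f1_coll = 2 * tp_c / (2 * tp_c + fp_c + fn_c)  # 2 / 4 = 0.5

# average='weighted': per-class F1 weighted by support (3 PAIDOFF, 2 COLLECTION)
f1_weighted_manual = (3 * f1_paid + 2 * f1_coll) / 5

# Predicted probabilities, columns ordered like classes_: [COLLECTION, PAIDOFF]
proba = np.array([[0.2, 0.8], [0.6, 0.4], [0.7, 0.3], [0.1, 0.9], [0.4, 0.6]])
true_idx = (y_true == "PAIDOFF").astype(int)  # column index of the true class
log_loss_manual = -np.mean(np.log(proba[np.arange(len(y_true)), true_idx]))

print(jaccard_manual, jaccard_score(y_true, y_pred, pos_label="PAIDOFF"))
print(f1_weighted_manual, f1_score(y_true, y_pred, average="weighted"))
print(log_loss_manual, log_loss(y_true, proba))
```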

First, download and load the test set:

!wget -O loan_test.csv https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/ML0101ENv3/labs/loan_test.csv

--2023-01-26 13:26:07-- https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/ML0101ENv3/labs/loan_test.csv

Resolving s3-api.us-geo.objectstorage.softlayer.net (s3-api.us-geo.objectstorage.softlayer.net)... 67.228.254.196

Connecting to s3-api.us-geo.objectstorage.softlayer.net (s3-api.us-geo.objectstorage.softlayer.net)|67.228.254.196|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3642 (3,6K) [text/csv]

Saving to: ‘loan_test.csv’

loan_test.csv 100%[===================>] 3,56K --.-KB/s in 0s

2023-01-26 13:26:07 (1,13 GB/s) - ‘loan_test.csv’ saved [3642/3642]

Load Test set for evaluation

test_df = pd.read_csv('loan_test.csv')

test_df.head()

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | PAIDOFF | 1000 | 30 | 9/8/2016 | 10/7/2016 | 50 | Bechalor | female |
| 1 | 5 | 5 | PAIDOFF | 300 | 7 | 9/9/2016 | 9/15/2016 | 35 | Master or Above | male |
| 2 | 21 | 21 | PAIDOFF | 1000 | 30 | 9/10/2016 | 10/9/2016 | 43 | High School or Below | female |
| 3 | 24 | 24 | PAIDOFF | 1000 | 30 | 9/10/2016 | 10/9/2016 | 26 | college | male |
| 4 | 35 | 35 | PAIDOFF | 800 | 15 | 9/11/2016 | 9/25/2016 | 29 | Bechalor | male |

test_df['due_date'] = pd.to_datetime(test_df['due_date'])

test_df['effective_date'] = pd.to_datetime(test_df['effective_date'])

test_df.head()

| | Unnamed: 0.1 | Unnamed: 0 | loan_status | Principal | terms | effective_date | due_date | age | education | Gender |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | PAIDOFF | 1000 | 30 | 2016-09-08 | 2016-10-07 | 50 | Bechalor | female |
| 1 | 5 | 5 | PAIDOFF | 300 | 7 | 2016-09-09 | 2016-09-15 | 35 | Master or Above | male |
| 2 | 21 | 21 | PAIDOFF | 1000 | 30 | 2016-09-10 | 2016-10-09 | 43 | High School or Below | female |
| 3 | 24 | 24 | PAIDOFF | 1000 | 30 | 2016-09-10 | 2016-10-09 | 26 | college | male |
| 4 | 35 | 35 | PAIDOFF | 800 | 15 | 2016-09-11 | 2016-09-25 | 29 | Bechalor | male |

```python
test_df['dayofweek'] = test_df['effective_date'].dt.dayofweek
test_df['weekend'] = test_df['dayofweek'].apply(lambda x: 1 if (x>3) else 0)
# Assign back instead of an inplace replace on a single column (chained assignment)
test_df['Gender'] = test_df['Gender'].map({'male': 0, 'female': 1})
X_test = test_df[['Principal','terms','age','Gender','weekend']]
X_test = pd.concat([X_test, pd.get_dummies(test_df['education'])], axis=1)
X_test.drop(['Master or Above'], axis=1, inplace=True)
# Note: this fits a separate scaler on the test set; reusing the training-set
# mean and standard deviation would keep both sets on the same scale
X_test = preprocessing.StandardScaler().fit(X_test).transform(X_test)
X_test[0:5]
```

array([[ 0.49362588, 0.92844966, 3.05981865, 1.97714211, -1.30384048,

2.39791576, -0.79772404, -0.86135677],

[-3.56269116, -1.70427745, 0.53336288, -0.50578054, 0.76696499,

-0.41702883, -0.79772404, -0.86135677],

[ 0.49362588, 0.92844966, 1.88080596, 1.97714211, 0.76696499,

-0.41702883, 1.25356634, -0.86135677],

[ 0.49362588, 0.92844966, -0.98251057, -0.50578054, 0.76696499,

-0.41702883, -0.79772404, 1.16095912],

[-0.66532184, -0.78854628, -0.47721942, -0.50578054, 0.76696499,

2.39791576, -0.79772404, -0.86135677]])
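The test set is prepared by repeating the training steps by hand, and the two copies can easily drift apart. A sketch of one way to keep them in sync, using a hypothetical helper `prepare_features` and toy rows in the raw CSV layout: the same function builds the columns for both sets, `reindex` guards against an education level that is missing from one file, and the scaler is fit on the training features only.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Column order of the training feature table built earlier in the notebook
FEATURES = ["Principal", "terms", "age", "Gender", "weekend",
            "Bechalor", "High School or Below", "college"]

def prepare_features(raw):
    """Hypothetical helper: apply the notebook's feature steps to a raw loan table."""
    out = raw[["Principal", "terms", "age"]].copy()
    out["Gender"] = raw["Gender"].map({"male": 0, "female": 1})
    dow = pd.to_datetime(raw["effective_date"], format="%m/%d/%Y").dt.dayofweek
    out["weekend"] = (dow > 3).astype(int)
    dummies = pd.get_dummies(raw["education"], dtype=int)
    out = pd.concat([out, dummies], axis=1)
    # Missing categories become all-zero columns; extra ones (e.g. Master or Above) are dropped
    return out.reindex(columns=FEATURES, fill_value=0)

# Toy rows in the raw CSV layout (values copied from the tables above)
train_raw = pd.DataFrame({
    "Principal": [1000, 1000, 1000], "terms": [30, 15, 30], "age": [45, 27, 28],
    "effective_date": ["9/8/2016", "9/8/2016", "9/9/2016"],
    "education": ["High School or Below", "college", "college"],
    "Gender": ["male", "male", "female"],
})
test_raw = pd.DataFrame({
    "Principal": [300], "terms": [7], "age": [35],
    "effective_date": ["9/9/2016"], "education": ["Master or Above"], "Gender": ["male"],
})

X_train_df = prepare_features(train_raw)
X_test_df = prepare_features(test_raw)
scaler = StandardScaler().fit(X_train_df)  # training statistics only
X_test_scaled = scaler.transform(X_test_df)
```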

y_test = test_df['loan_status'].values

y_test[0:5]

array(['PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF', 'PAIDOFF'],

dtype=object)

yhat_KNN = KNN.predict(X_test)

Jaccard_KNN = jaccard_score(y_test, yhat_KNN,pos_label="PAIDOFF")

f1_KNN = f1_score(y_test, yhat_KNN, average='weighted')

yhat_dTree = dTree.predict(X_test)

Jaccard_dTree = jaccard_score(y_test, yhat_dTree,pos_label="PAIDOFF")

f1_dTree = f1_score(y_test, yhat_dTree, average='weighted')

yhat_SVM = clf.predict(X_test)

Jaccard_SVM = jaccard_score(y_test, yhat_SVM,pos_label="PAIDOFF")

f1_SVM = f1_score(y_test, yhat_SVM, average='weighted')

yhat_LR = LR.predict(X_test)

Jaccard_LR = jaccard_score(y_test, yhat_LR,pos_label="PAIDOFF")

f1_LR = f1_score(y_test, yhat_LR, average='weighted')

yhat_LR_prob = LR.predict_proba(X_test)

log_loss_LR = log_loss(y_test, yhat_LR_prob)

Report

You should be able to report the accuracy of the built model using different evaluation metrics:

| Algorithm | Jaccard | F1-score | LogLoss |
|---|---|---|---|
| KNN | ? | ? | NA |
| Decision Tree | ? | ? | NA |
| SVM | ? | ? | NA |
| LogisticRegression | ? | ? | ? |

print ('Algorithm |', 'Jaccard |', 'F1-score |', 'LogLoss |')

print ('--------------------------------------------------')

print ('KNN: ', "{:.2f} ".format(Jaccard_KNN), "{:.2f} ".format(f1_KNN), 'NA')

print ('Decision Tree: ', "{:.2f} ".format(Jaccard_dTree), "{:.2f} ".format(f1_dTree), 'NA')

print ('SVM: ', "{:.2f} ".format(Jaccard_SVM), "{:.2f} ".format(f1_SVM), 'NA')

print ('LogisticRegression:', "{:.2f} ".format(Jaccard_LR), "{:.2f} ".format(f1_LR), "{:.2f} ".format(log_loss_LR))

Algorithm | Jaccard | F1-score | LogLoss |

--------------------------------------------------

KNN: 0.65 0.65 NA

Decision Tree: 0.68 0.63 NA

SVM: 0.78 0.76 NA

LogisticRegression: 0.74 0.66 0.57
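The printed report can also be kept as a DataFrame, which makes it easy to sort the models or pick the best one programmatically. A sketch using the numbers printed above, with `NaN` where log loss does not apply:

```python
import numpy as np
import pandas as pd

# Scores copied from the printed report above
report = pd.DataFrame(
    {
        "Jaccard": [0.65, 0.68, 0.78, 0.74],
        "F1-score": [0.65, 0.63, 0.76, 0.66],
        "LogLoss": [np.nan, np.nan, np.nan, 0.57],
    },
    index=["KNN", "Decision Tree", "SVM", "LogisticRegression"],
)

print(report.sort_values("F1-score", ascending=False))
print("Best by F1-score:", report["F1-score"].idxmax())  # SVM
```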


Author: Saeed Aghabozorgi

Saeed Aghabozorgi, PhD, is a Data Scientist at IBM with a track record of developing enterprise-level applications that substantially increase clients’ ability to turn data into actionable knowledge. He is a researcher in the data mining field and an expert in developing advanced analytic methods like machine learning and statistical modelling on large datasets.

Change Log

| Date (YYYY-MM-DD) | Version | Changed By | Change Description |
|---|---|---|---|
| 2020-10-27 | 2.1 | Lakshmi Holla | Made changes in import statement due to updates in version of sklearn library |
| 2020-08-27 | 2.0 | Malika Singla | Added lab to GitLab |

<h3 align="center"> © IBM Corporation 2020. All rights reserved. </h3>